This is an old revision of the document!

QCBS R Workshops

This series of 10 workshops walks participants through the steps required to use R for a wide array of statistical analyses relevant to research in biology and ecology. These open-access workshops were created by members of the QCBS both for members of the QCBS and the larger community.

Workshop 8: Multivariate analyses

Developed by: Bérenger Bourgeois, Xavier Giroux-Bougard, Amanda Winegardner, Emmanuelle Chrétien and Monica Granados. (Material in R script obtained from: Borcard, Gillet & Legendre (2011). Numerical Ecology with R. Springer New York.)

Summary: In this workshop, you will learn the basics of multivariate analyses for your own data. You will learn to choose appropriate distance metrics and transformations for clustering, unconstrained ordinations, and to perform Principal Component Analysis, Correspondence Analysis, Principal Coordinate Analysis and Nonmetric MultiDimensional Scaling, to find patterns in your community composition data!

Link to associated Prezi: Prezi

Download the R script and data required for this workshop:

Make sure to load the following packages (see how in the R script):

- | Load the required packages and functions

install.packages("vegan") install.packages("gclus") install.packages("ape") library(vegan) library(gclus) library(ape) source(file.choose()) # use coldiss.R which you have downloaded to your own directory

What is ordination?

Ordination is a set of methods for depicting samples in multiple dimensions (Clarke and Warwick 2011) and often feels like a catch-all term in ecological statistics. Ecologists are often told to “run a PCA” in the face of complex and messy multivariate data. R code for ordination techniques is readily available and relatively straight-forward to implement for most data. Interpretation of ordination analyses can be more difficult, especially if you are unsure of the specific questions you wish to explore with a particular ordination method. As such, while ordination methods are very useful for helping simplify and make sense of multivariate data, careful consideration of why the methods are being used and which ones are most appropriate is necessary for strong ecological analyses!

When you use an ordination method, you are taking a set of variables and creating new principal axes along which samples (sites etc.) are scored or ordered (Gotelli and Ellison 2004), in order to reduce or simplify the data, i.e. to create new axes that represent most of the variation in the data. As an example, a dataset with 24 variables may be reduced to five principal components that represent the main patterns of variation amongst samples. Unconstrained ordination methods are generally not frameworks for hypotheses testing, rather they are best suited for exploratory data analysis. The different types of ordination can be useful for many different questions; see (the Ordination Website) for an overview of different types of ordination).

Getting started with data

We will use two main data sets in the first part of this workshop. “DoubsSpe.csv” is a data frame of fish community data where the first column contains site names from 1 to 30 and the remaining columns are fish taxa. The taxa columns are populated by fish abundance data (counts). “DoubsEnv.csv” is a data frame of environmental data for the same sites contained in the fish community data frame. Again, the first column contains site names from 1 to 30. The remaining columns contain measurements for 11 abiotic variables. Note that data used in ordination analyses is generally in wide-format.

- | Load the Doubs Species and Environmental Data

#Species community data frame (fish abundance): “DoubsSpe.csv” spe<- read.csv(file.choose(), row.names=1) spe<- spe[-8,] #Site number 8 contains no species and so row 8 (site 8) is removed. Be careful to #only run this command line once as you are overwriting "spe" each time. #Environmental data frame: “DoubsEnv.csv” env<- read.csv(file.choose(), row.names=1) env<- env[-8,] #Remove corresponding abiotic data for site 8 (since removed from fish data). #Again, be careful to only run the last line once.

1. Explore the data

1.1 Species data

We can use summary functions to explore the “spe” data (fish community data) and discover things like the dimensions of the matrix, column headings and summary statistics for the columns. This is a review from

Workshop 2.

- | Explore DoubsSpe

names(spe) #see names of columns in spe dim(spe) #dimensions of spe; number of columns and rows str(spe) #displays internal structure of objects head(spe) #first few rows of the data frame summary(spe) #summary statistics for each column; min value, median value, max value, mean value etc.

Look at the species’ distribution frequencies.

- | Species distribution of DoubsSpe data

#Species distribution (ab <- table(unlist(spe))) #note that when you put an entire line of code in brackets like this, the output for that operation is displayed right away in the R console barplot(ab, las=1, xlab="Abundance class", ylab="Frequency", col=grey(5:0/5))

Can see that there is a high frequency of zeros in the abundance data.

Can see that there is a high frequency of zeros in the abundance data.

See how many absences there are in the fish community data.

- | Absences in fish data

sum(spe==0)

Look at the proportion of zeros in the fish community data.

- | Proportion of zeros

sum(spe==0)/(nrow(spe)*ncol(spe))

The proportion of zeros in the dataset is ~0.5.

See the frequency at which different numbers of species occur together.

- | Species occurrences

spe.pres <- colSums(spe>0) #compute the number of sites where each species is present. hist(spe.pres, main="Species occurrence", las=1, xlab="Frequency of occurrences", breaks=seq(0,30, by=5), col="grey")

Can see that an intermediate number of sites contain the highest number of species.

Calculate the number of species that occur at each site. This is a simplistic way of calculating species richness for each site. In some cases, the number of individuals found in a site may differ between sites. Because there may be a relationship between the number of individuals counted in a site or sample and the taxa or species richness, rarefied species richness may be a more appropriate metric than total richness. The rarefy() function in vegan can be used to generate rarefied species richness.

1.2 Environmental data

Explore the environmental data and create a panel of plots to compare collinearity:

- | Explore Doubs Env data

names(env) dim(env) str(env) head(env) summary(env) pairs(env, main="Bivariate Plots of the Environmental Data" )

In this case, the environmental data (explanatory variables) are all in different units and need to be standardized prior to computing distance measures to perform most ordination analyses. Standardize the environmental data (11 variables) using the function decostand() in vegan.

- | Decostand

env.z <- decostand(env, method="standardize") apply(env.z, 2, mean) # the data are now centered (means~0) apply(env.z, 2, sd) # the data are now scaled (standard deviations=1)

2. Association measures

Matrix algebra ultimately lies below all ordinations. A matrix consists of data (e.g. measured values) in rows and columns. Prior to starting multivariate analyses, you likely have matrices with ecological data (such as the DoubsEnv or DoubsSpe that we have used before), but in ordination methods, your original data matrices are used to create association matrices. Before launching into ordination methods, it is important to spend some time with your data matrices. Creating an association matrix between objects or among descriptors allows for calculations of similarity or distance between objects or descriptors (Legendre and Legendre 2012). Exploring the possible association measures that can be generated from your data prior to running ordinations can help you to better understand what distance measure to use within ordination methods. At this point in time, it may be difficult to see the purpose behind different dissimilarity indices, or describing what they do to your multivariate data, but we will need this knowledge when we consider canonical ordination methods later on.

IN SUMMARY: To ordinate objects you need to compute distances among them. Distances can be computed in several ways, taking into account abundance or presence/absence data. More importantly, they are several properties that are important for distance metrics that are explored with the data examples below. For more information about the properties of distance metrics and some key terms, click on the hidden section below.

2.1 Distance measures

Quantitative species data We can use the vegdist() function to compute dissimilarity indices for community composition data. These can then be visualized as a matrix if desired.

- | vegdist

spe.db<-vegdist(spe, method="bray") # "bray" with presence-absence data is Sorensen dissimilarity spe.dj<-vegdist(spe, method="jac") # Jaccard dissimilarity spe.dg<-vegdist(spe, method="gower") # Gower dissimilarity spe.db<-as.matrix(spe.db) #Put in matrix form (can visualize, write to .csv etc)

A condensed version of the spe.db matrix where the numbers represent the distance(dissimilarity) between the first 3 species in DoubsSpe would look like this:

| Species 1 | Species 2 | Species 3 | |

|---|---|---|---|

| Species 1 | 0.0 | 0.6 | 0.68 |

| Species 2 | 0.6 | 0.0 | 0.14 |

| Species 3 | 0.68 | 0.14 | 0.0 |

You can see that when comparing a species to itself (e.g. Species 1 to Species 1), the distance = 0, because species 1 is like itself and distance is maximum when the species compared are 100% different.

These same distance measures can be calculated from presence-absence data by setting binary=TRUE in the vegdist() function. This will give slightly different distance measures.

You can also create graphical depictions of these association matrices using a function called coldiss.

Quantitative environmental data Let’s look at associations between environmental variables (also known as Q mode):

- | dist, environmental variables

?dist # this function also compute dissimilarity matrix env.de<-dist(env.z, method = "euclidean") # euclidean distance matrix of the standardized environmental variables windows() #Creates a separate graphical window coldiss(env.de, diag=TRUE)

We can then look at the dependence between environmental variables (also known as R mode):

- | correlations, environmental variables

(env.pearson<-cor(env)) # Pearson r linear correlation round(env.pearson, 2) #Rounds the coefficients to 2 decimal points (env.ken<-cor(env, method="kendall")) # Kendall tau rank correlation round(env.ken, 2)

The Pearson correlation measures the linear correlation between two variables. The Kendall tau is a rank correlation which means that it quantifies the relationship between two descriptors or variables when the data are ordered within each variable.

In some cases, there may be mixed types of environmental variables. Q mode can still be used to find associations between these environmental variables. We’ll do this by first creating an example dataframe:

- | Example dataframe

var.g1<-rnorm(30, 0, 1) var.g2<-runif(30, 0, 5) var.g3<-gl(3, 10) var.g4<-gl(2, 5, 30) (dat2<-data.frame(var.g1, var.g2, var.g3, var.g4)) str(dat2) summary(dat2)

A dissimilarity matrix can be generated for these mixed variables using the Gower dissimilarity matrix:

- | daisy

?daisy #This function can handle NAs in the data (dat2.dg<-daisy(dat2, metric="gower")) coldiss(dat2.dg)

Challenge 1 - Introductory Discuss with your neighbour: How can we tell how similar objects are when we have multivariate data? Make a list of all your suggestions.

Challenge 1 - Introductory Solution

Challenge 1 - Advanced Calculate the Bray-Curtis and the Gower dissimilarity of species abundance CHA, TRU and VAI for sites 1, 2 and 3 (using the “spe” and “env” dataframes) without using the decostand() function.

Challenge 1 - Advanced Solution

2.2. Transformations of community composition data

Sometimes species/community data may also need to be standardized or transformed. The decostand() function in vegan provides standardization and transformation options for community composition data.

Transforming abundance or count data to presence-absence data:

- | decostand, presence-absence

spe.pa<-decostand(spe, method="pa")

Other transformations can be used to correct for the influence of rare species, e.g. the Hellinger transformation:

- | Hellinger and Chi-square

#Hellinger transformation spe.hel<-decostand(spe, method="hellinger") #can also use method=”hell” #Chi-square transformation spe.chi<-decostand(spe, method="chi.square")

Optional Challenge 2 - Advanced Produce Hellinger and Chi-square transformed abundance data for the “spe” data without using decostand().

Optional Challenge 2 - Advanced Solution

2.3 Clustering

One application of association matrices is clustering. It is not a statistical method per se, because it does not test a hypothesis, but it highlights structures in the data by partitioning either the objects or the descriptors. As a result, similar objects are combined into groups, allowing distinctions – or contrasts – between groups to be identified. One goal of ecologists could be to divide a set of sites into groups with respect to their environmental conditions or their community composition.

Clustering results are often represented as dendrograms (trees), where objects agglomerate into groups. There are several families of clustering methods, but for the purpose of this workshop, we will present an overview of three hierarchical agglomerative clustering methods: single linkage, complete linkage, and Ward’s minimum variance clustering. See chapter 8 of Legendre and Legendre 2012 for more details on the different families of clustering methods.

In hierarchical methods, elements of lower clusters (or groups) become members of larger, higher ranking clusters, e.g. species, genus, family, order. Prior to clustering, one needs to create an association matrix among the objects. Distance matrix is the default choice of clustering functions in R. The association matrix is first sorted in increasing distance order (or decreasing similarities). Then, groups are formed hierarchically following rules specific to each method.

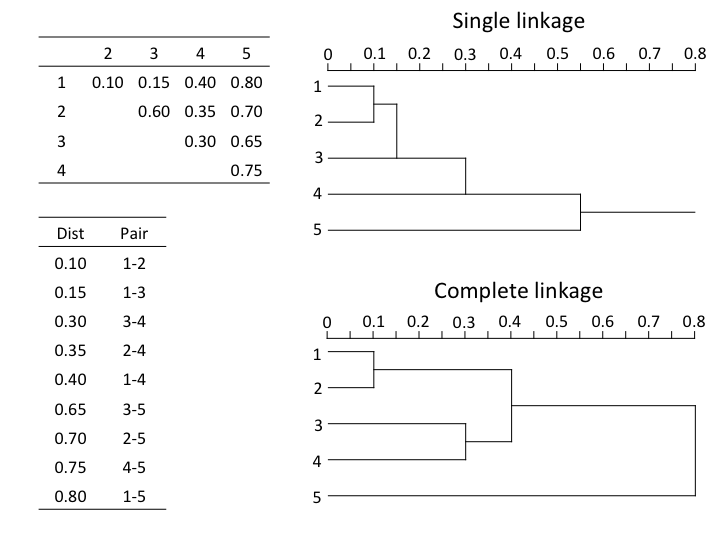

Let's take a simple example of a euclidean distance matrix between 5 objets which were sorted in ascending order.

In single linkage agglomerative clustering (also called nearest neighbour sorting), the objects at the closest distances agglomerate. The two closest objects agglomerate first, the next two closest objects/clusters are then linked, and so on, which often generates long thin clusters or chains of objects (see how objets 1 to 5 cluster successively). Conversely, in complete linkage agglomerative clustering, an object agglomerates to a group only when linked to the furthest element of the group, which in turn links it to all members of that group (in the above example, the group formed of objets 3 and 4 only clusters with group 1-2 at 0.4, a distance at which objets 1, 2, 3 and 4 are all linked). As a result, complete linkage clustering will form many small separate groups, and is more appropriate to look for contrasts, discontinuities in the data.

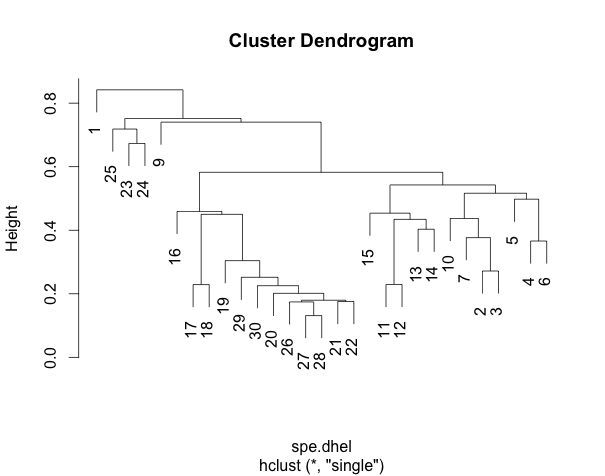

Let’s compare the single and complete linkage clustering methods using the Doubs fish species data.

Species data were already Hellinger-transformed. The cluster analysis requiring similarity or dissimilarity indices, the first step will be to generate the Hellinger distance indices.

- | vegdist, Hellinger distance

spe.dhel<-vegdist(spe.hel,method="euclidean") #generates the distance matrix from Hellinger transformed data #See difference between the two matrices head(spe.hel)# Hellinger-transformed species data head(spe.dhel)# Hellinger distances among sites

Most clustering methods can be computed with the function hclust() of the stats package.

- | hclust, comparison of single and complete linkage clustering

#Faire le groupement à liens simples #Perform single linkage clustering spe.dhel.single<-hclust(spe.dhel, method="single") plot(spe.dhel.single) #Perform complete linkage clustering spe.dhel.complete<-hclust(spe.dhel, method="complete") plot(spe.dhel.complete)

Are there big differences between the two dendrograms?

In single linkage clustering, chains of objets occur (e.g. 19, 29, 30, 20, 26, etc.), whereas more contrasted groups are formed in the complete linkage clustering.

Ward’s minimum variance clustering differ from these two methods in that it clusters objects into groups using the criterion of least squares (similar to linear models). At the beginning, each object is considered being a cluster of its own. At each step, the pair of clusters merging is the one leading to minimum increase in total within-group sum of squares.

Again, it is possible to generate a Ward’s minimum variance clustering with hclust(). However, the dendogram shows squared distances by default. In order to compare this dendrogram to the single and complete linkage clustering results, one must calculate the square root of the distances.

- | hclust, Ward's minimum variance clustering

#Perform Ward minimum variance clustering spe.dhel.ward<-hclust(spe.dhel, method="ward.D2") plot(spe.dhel.ward) #Re-plot the dendrogram by using the square roots of the fusion levels spe.dhel.ward$height<-sqrt(spe.dhel.ward$height) plot(spe.dhel.ward) plot(spe.dhel.ward, hang=-1) # hang=-1 aligns all objets on the same line

The clusters generated using Ward’s method tend to be more spherical and to contain similar numbers of objects.

One must be careful in the choice of an association measure and clustering method in order to correctly address a problem. What are you most interested in: gradients? Contrasts? In addition, the results should be interpreted with respect to the properties of the method used. If more than one method seems suitable to an ecological question, computing them all and compare the results would be the way to go. As a reminder, clustering is not a statistical method, but further steps can be taken to identify interpretable clusters (e.g. where to cut the tree), or to compute clustering statistics. Clustering can also be combined to ordination in order to distinguish groups of sites. These go beyond the scope of this workshop, but see Borcard et al. 2011 for more details.

3. Unconstrained Ordination

Unconstrained ordination allows us to organize samples, sites or species along continuous gradients (e.g. ecological or environmental). The key difference between unconstrained and constrained ordination (discussed later in this workshop- see ‘Canonical Ordination’) is that in the unconstrained techniques we are not attempting to define a relationship between independent and dependent sets of variables.

Unconstrained ordination can be used to: - Assess relationships within a set of variables (not between sets). - Find key components of variation between samples, sites, species etc. - Reduce the number dimensions in multivariate data without substantial loss of information. - Create new variables for use in subsequent analyses (such as regression). These principal components are weighted, linear combinations of the original variables in the ordination. Source

3.1 Principal Component Analysis

Principal component analysis (PCA) was originally described by Pearson (1901) although it is more often attributed to Hotelling (1933) who proposed it independently. The method and several of its implications for data analysis are presented in the seminal paper of Rao (1964). PCA is used to generate a few key variables from a larger set of variables that still represent as much of the variation in the dataset as possible. PCA is a powerful technique to analyze quantitative descriptors (such as species abundances), but cannot be applied to binary data (such as species absence/presence).

Based on a dataset containing normally distributed variables, the first axis (or principal-component axis) of a PCA is the line that goes through the greatest dimension of the concentration ellipsoid describing the multinormal distribution. Similarly, the following axes go through the dimensions of the concentration ellipsoid by decreasing length. One can thus derive a maximum of p principal axes form a data set containing p variables.

For this, PCA rotates the original system of axes defined by the variables in a way that the successive new axes are orthogonal to one another and correspond to the successive dimensions of maximum variance of the scatter of points. The new variables produced in a PCA are uncorrelated with each other (axes are orthogonal to each other) and therefore may be used in other analyses such as multiple regression (Gotelli and Ellison 2004). The principal components give the positions of the objects in the new system of coordinates. PCA works on an association matrix among variables containing the variances and covariances of the variables. PCA preserves Euclidean distances and detects linear relationships. As a consequence, raw species abundance data are subjected to a pre-transformation (i.e. a Hellinger transformation) before computing a PCA.

To do a PCA you need: - A set of variables (with no distinction between independent or dependent variables, i.e. a set of species OR a set of environmental variables). - Samples that are measured for the same set of variables. - Generally a dataset that is longer than it is wide is preferred.

PCA is most useful with data with more than two variables, but is easier to describe using a two dimensional example. In this example (derived from Clarke and Warwick 2001) , we have nine sites with abundance data for two species:

| Site | Species 1 | Species 2 |

|---|---|---|

| A | 6 | 2 |

| B | 0 | 0 |

| C | 5 | 8 |

| D | 7 | 6 |

| E | 11 | 6 |

| F | 10 | 10 |

| G | 15 | 8 |

| H | 18 | 14 |

| I | 14 | 14 |

If you plotted these samples in the two dimensions, the plot would look like this:

This is a straight forward scatter plot and is an ordination plot, but you can imagine that it is more difficult to produce and visualize such a plot if there were more than two species. In that case, you would want to reduce the number of variables to principal components. If the 2D plot above was too complex and we wanted to reduce the data into a single dimension, we would be doing so with a PCA:

In this case, the first principal component is the line of best fit through the points (sites) with the sites perpendicular to the line.

A second principal component is then added perpendicular to the first axis:

The final plot then is the two PC axes rotated where the axes are now principal components as opposed to species:

For PCAs with more than two variables, principal components are added like this (Clarke and Warwick 2001):

PC1 = axis that maximises the variance of the points that are projected perpendicularly onto the axis. PC2 = must be perpendicular to PC1, but the direction is again the one in which variance is maximised when points are perpendicularly projected. PC3 and so on: perpendicular to the first two axes. Essentially, when there are more than two dimensions, PCA produces a new space in which all PCA axes are orthogonal (e.g. where the correlation among any two axes is null) and where the PCA axes are ordered according to the percent of the variance of the original data they explain.

The “spe” data includes 27 fish taxa. To simplify the 27 fish taxa into a smaller number of fish-related variables or to identify where different sites or samples are associated with particular fish taxa we can run a PCA.

Run a PCA on Hellinger-transformed species data:

- | PCA using rda() in vegan

#Run the PCA using the rda() function (NB: rda() is used for both PCA and RDA) spe.h.pca <- rda(spe.hel) #Extract the results summary(spe.h.pca) #overall results

The summary looks like this:

Interpretation of ordination results:

A new word from this output is “eigenvalue”. An eigenvalue is the value of the change in the length of a vector, and for our purposes is the amount of variation explained by each axis in a PCA. As you can see, the summary function produces a fair amount of information. From the summary we can see how much of the variance in the data is explained by the unconstrained variables (i.e. variables where we have made no effort to define relationships between the variables). In this case, the total variance of the sites explained by the species is 0.5. The summary also tells you what proportion of the total explained variance is explained by each principal component in the PCA: the first axis of the PCA thus explains 51.33% of the variation, and the second axis 12.78%. You can also extract certain parts of the output, try this:

- | Part of the PCA summary

summary(spe.h.pca, display=NULL) # only eigenvalues and their contribution to the variance eigen(cov(spe.hel)) # also compute the eigenvalues

Sometimes you may want to extract the scores (i.e. the coordinates within a PCA biplot) for either the “sites” (the rows in your dataset, whether they be actual sites or not) or the “species” (the variables in your data, whether they be actual species or some other variables). This is useful if you want to then use a principal component as a variable in another analysis, or to make additional graphics. For example, with the “spe” dataset, you might want to obtain a single variable that is a composite of all the fish abundance data and then use that use that variable in a regression with another variable, or plot across a spatial gradient. To extract scores from a PCA, use the scores() function:

- | scores()

spe.scores <- scores(spe.h.pca, display="species", choices=c(1,2)) # species scores on the first two PCA axes site.scores <- scores(spe.h.pca, display="sites", choices=c(1,2)) # sites scores on the first two PCA axes #Note: if you don’t specify the number of principal components to extract (e.g. choices=c(1,2) or choices=c(1:2) then all of the scores will be extracted for all of the principal components.

The PCA on the “spe” fish data produces as many principal components as there are fish taxon (columns), which in this case means that 27 principal components are produced. In many cases though, you may have done a PCA to reduce the number of variables to deal with and produce composite variables for the fish. In this case, you are likely interested in knowing how many of these principal components are actually significant or adding new information to the PCA (i.e. how many principal components do you need to retain before you aren’t really explaining any more variance with the additional principal components). To determine this, you can use the Kaiser-Guttman criterion and produce a barplot showing at what point the principal components are no longer explaining significant amount of variance. The code for the barplot below shows the point at which the variance explained by a new principal component explains less than the average amount explained by all of the eigenvalues:

- | Significant axes

# Identify the significant axis using the Kaiser-Guttman criterion ev <- spe.h.pca$CA$eig ev[ev>mean(ev)] n <- length(ev) barplot(ev, main="Eigenvalues", col="grey", las=2) abline(h=mean(ev), col="red") legend("topright", "Average eigenvalue", lwd=1, col=2, bty="n")

From this barplot, you can see that once you reach PC6, the proportion of variance explained falls below the average proportion explained by the other components. If you take another look at the PCA summary, you will notice that by the time you reach PC5, the cumulative proportion of variance explained by the principal components is 85%.

A PCA is not just for species data. It can also be run and interpreted in the same way using standardized environmental variables:

- | PCA on environmental variables

#Run the PCA env.pca <- rda(env.z) # or rda(env, scale=TRUE) #Extract the results summary(env.pca) summary(env.pca, scaling=2)

Scaling refers to what portion of the PCA is scaled to the eigenvalues. Scaling = 2 means that the species scores are scaled by eigenvalues, whereas scaling = 1 means that site scores are scaled by eigenvalues. Scaling = 3 means that both species and site scores are scaled symmetrically by square root of eigenvalues. Using scaling = 1 means that the Euclidean distances among objects (e.g. the rows in your data) are preserved, whereas scaling = 2 means that the correlations amongst descriptors (e.g. the columns in this data) are preserved. This means that when you look at a biplot of a PCA that has been run with scaling=2, the angle between descriptors represents correlation.

- | Significant axes

# Identify the significant axis using the Kaiser-Guttman criterion ev <- env.pca$CA$eig ev[ev>mean(ev)] n <- length(ev) barplot(ev, main="Eigenvalues", col="grey", las=2) abline(h=mean(ev), col="red") legend("topright", "Average eigenvalue", lwd=1, col=2, bty="n")

Compare this eigenvalue barplot with the one you created for the species PCA.

As you saw in the explanation of the summary output, a lot of information can be extracted from a PCA before even plotting it. However, many of us interpret data best visually and a figure of a PCA is often the best way to convey major patterns. PCA biplots can be plotted in base R. A PCA biplot includes the x-axis as the first Principal Component and the y-axis as the second Principal Component.

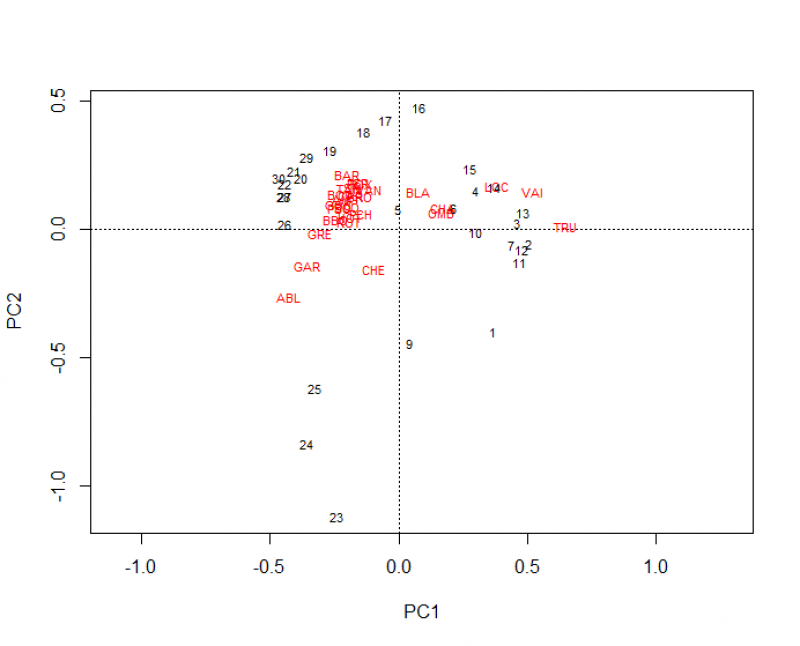

For some plotting exercises, let's start with the species PCA. A basic biplot without any customization could be plotted like this, where the site positions are shown by black numbers and species’ positions are shown by red species codes. Remember, species positions come from plotting species along PCs and the site positions are derived from the weighted sums of species scores.

- | Plot species PCA

plot(spe.h.pca)

Basically, the plot above plots the PCA like this:

- | Plot species PCA line by line

plot(spe.h.pca, type="n") #produces a blank biplot with nothing displayed but the axes points(spe.h.pca, dis="sp", col="blue") #points are added for the species (columns) (dis=) #use text() instead of points() if you want the labels points(spe.h.pca, dis="sites", col="red") #points are added for the sites (rows)

To create more detailed plots and to play with aesthetics, try this code:

- | More aesthetically pleasing PCA plot

#Biplot of the PCA on transformed species data (scaling 1) windows() plot(spe.h.pca) windows() biplot(spe.h.pca) windows() plot(spe.h.pca, scaling=1, type="none", # scaling 1 = distance biplot : # distances among objects in the biplot approximate their Euclidean distances # but angles among descriptor vectors DO NOT reflect their correlation xlab = c("PC1 (%)", round((spe.h.pca$CA$eig[1]/sum(spe.h.pca$CA$eig))*100,2)), #this comes from the summary ylab = c("PC2 (%)", round((spe.h.pca$CA$eig[2]/sum(spe.h.pca$CA$eig))*100,2))) points(scores(spe.h.pca, display="sites", choices=c(1,2), scaling=1), pch=21, col="black", bg="steelblue", cex=1.2) text(scores(spe.h.pca, display="species", choices=c(1), scaling=1), scores(spe.h.pca, display="species", choices=c(2), scaling=1), labels=rownames(scores(spe.h.pca, display="species", scaling=1)), col="red", cex=0.8)

This code produces 3 plots, the final plot is the most visually pleasing:

What conclusions can you draw from this plot? From the plot, you can see the site scores shown by the blue dots. If you superimposed site labels on top, you could see what sites are closer to each other in terms of the species found at those sites, but even without the specific labels, you can see that there are only a few sites that are farther away from the majority. The species names are shown by their names in red and from the plot, you can see for example that the species “ABL” is not found or not found in the same prevalence in the majority of sites as other species closer to the centre of the ordination.

Biplots need to be interpreted in terms of angles from center of plot, not just in terms of proximity. Two variables at 90 degree angle are uncorrelated. Two variables in opposing directions are negatively correlated. Two variables very close together are strongly correlated, at least in the space described by these axes.

Now let's look at a plot of the environmental PCA:

- | Biplot of environment PCA

#Biplot of the PCA on the environmental variables (scaling 2) windows() plot(env.pca) windows() plot(env.pca, scaling=2, type="none", # scaling 2 = correlation biplot : # distances among abjects in the biplot DO NOT approximate their Euclidean distances # but angles among descriptor vectors reflect their correlation xlab = c("PC1 (%)", round((env.pca$CA$eig[1]/sum(env.pca$CA$eig))*100,2)), ylab = c("PC2 (%)", round((env.pca$CA$eig[2]/sum(env.pca$CA$eig))*100,2)), xlim = c(-1,1), ylim=c(-1,1)) points(scores(env.pca, display="sites", choices=c(1,2), scaling=2), pch=21, col="black", bg="darkgreen", cex=1.2) text(scores(env.pca, display="species", choices=c(1), scaling=2), scores(env.pca, display="species", choices=c(2), scaling=2), labels=rownames(scores(env.pca, display="species", scaling=2)), col="red", cex=0.8)

Remember that a PCA biplot is really just a scatter plot, where the axes are scores extracted from composite variables. This means that there are many different ways you can plot a PCA. For example, try using your ggplot() skills from Workshop 4 to extract PCA scores and plot an ordination in ggplot.

Use of PCA axis as composite explanatory variables:

In some cases, users want to reduce several environmental variables in a few numbers of composite variables. When PCA axes represent ecological gradients (i.e. when environmental variables are correlated with PCA axis in a meaningful way), users can use site scores along PCA Axis in further analyses instead of the raw environmental data. In other words, sites scores along PCA Axis represent linear combinations of descriptors and can consequently be used as proxy of ecological conditions in “post-ordination” analyses. In the example above, the first PCA axis can be related to a gradient from oligotrophic, oxygen-rich to eutrophic, oxygen-deprived water: from left to right, a first group display the highest values of altitude (alt) and slope (pen), and the lowest values in river discharge (deb) and distance from the source (das). The second group of sites has the highest values in oxygen content (oxy) and the lowest in nitrate concentration (nit). A third group shows intermediate values in almost all the measured variables. If users are then interested in identifying if a specific species is associated with ologotrophic or eutrophic water, one can correlate the species abundances to sites scores along PCA Axis 1. For example, if one want to assess if the species TRU prefers oligotrophic or eutrophic water, the following linear model can be used :

- | Response of TRU species to the first ordination gradient

Sites_scores_Env_Axis1<- scores(env.pca, display="sites", choices=c(1), scaling=2) spe$ANG plot( Sites_scores_Env_Axis1, spe$TRU) summary(lm(spe$TRU~Sites_scores_Env_Axis1)) abline(lm(spe$TRU~Sites_scores_Env_Axis1))

This simple model shows that the abundance of species TRU is significantly dependent of site scores along PCA axis 1 (t = -5.30, p = 1.35e-05, adj-R2 = 49.22%), i.e. depends of an oligotrophic-eutrophic gradient. The following plot identifies a preference of this species for oligotrophic water.

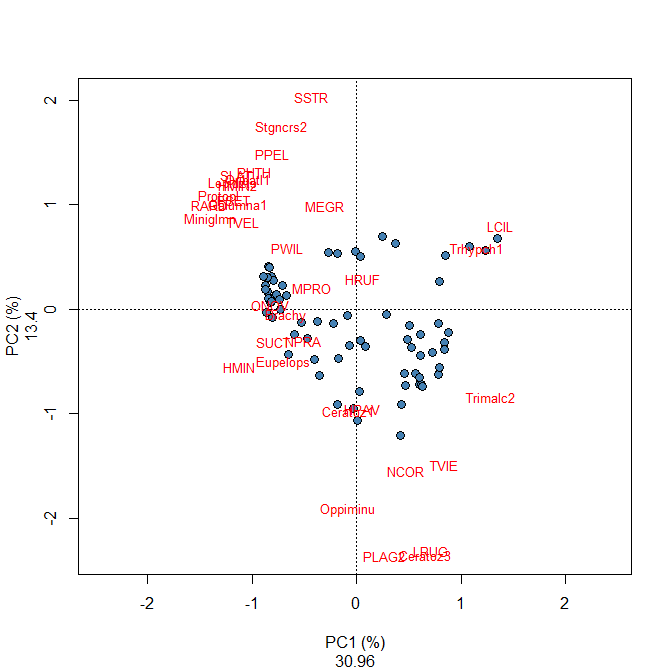

Challenge 3 Run a PCA of the “mite” species abundance data. What are the significant axes of variation? Which groups of sites can you identify? Which species are related to each group of sites? Use:

- | Mite species data

mite.spe <- mite #mite data is from the vegan package

Challenge 3 - Solution

3.2. Correspondence Analysis

One of the key assumptions made in PCA is that species are related to each other linearly and that they respond linearly to ecological gradients. This is not necessarily the case with a lot of ecological data (e.g. many species have unimodal species distributions). Using PCA on data with unimodal species distributions or a lot of zero values may lead to a phenomenon called the “horseshoe effect”, and can occur with long ecological gradients. As such, a CA or Correspondence Analysis may be a better option for this type of data, see Legendre and Legendre (2012) for further information. As CA preserves Chi2 distances (while PCA preserves Euclidean distances), this technique is indeed better suited to ordinate datasets containing unimodal species distributions, and has, for a long time, been one of the favourite tools for the analysis of species presence–absence or abundance data. In CA, the raw data are first transformed into a matrix Q of cell-by-cell contributions to the Pearson Chi2 statistic, and the resulting table is submitted to a singular value decomposition to compute its eigenvalues and eigenvectors. The result is an ordination, where it is the Chi2 distance that is preserved among sites instead of the Euclidean distance in PCA. The Chi2 distance is not influenced by the double zeros. Therefore, CA is a method adapted to the analysis of species abundance data without pre-transformation. Contrary to PCA, CA can also be applied to analyze both quantitative and binary data (such as species abundances or absence/presence). As in PCA, the Kaiser-Guttman criterion can be applied to determine the significant axes of a CA, and ordination axes can be extracted to be used in multiples regressions.

Run a CA on species data:

- | A using cca() in vegan

#Run the CA using the cca() function (NB: cca() is used for both CA and CCA) spe.ca <- cca(spe) # Identify the significant axes ev<-spe.ca$CA$eig ev[ev>mean(ev)] n=length(ev) barplot(ev, main="Eigenvalues", col="grey", las=2) abline(h=mean(ev), col="red") legend("topright", "Average eigenvalue", lwd=1, col=2, bty="n")

From this barplot, you can see that once you reach C6, the proportion of variance explained falls below the average proportion explained by the other components. If you take another look at the CA summary, you will notice that by the time you reach CA5, the cumulative proportion of variance explained by the principal components is 84.63%.

- | Extract the CA results

summary(spe.h.pca) #overall results summary(spe.h.pca, diplay=NULL)# only axis eigenvalues and contribution

CA results are presented in a similar manner as PCA results. You can see here that CA1 explains 51.50% of the variation in species abundances, while CA2 explain 12.37% of the variation.

- | Construct the CA biplots

par(mfrow=c(1,2)) #### scaling 1 plot(spe.ca, scaling=1, type="none", main='CA - biplot scaling 1', xlab=c("CA1 (%)", round((spe.ca$CA$eig[1]/sum(spe.ca$CA$eig))*100,2)), ylab=c("CA2 (%)", round((spe.ca$CA$eig[2]/sum(spe.ca$CA$eig))*100,2))) points(scores(spe.ca, display="sites", choices=c(1,2), scaling=1), pch=21, col="black", bg="steelblue", cex=1.2) text(scores(spe.ca, display="species", choices=c(1), scaling=1), scores(spe.ca, display="species", choices=c(2), scaling=1), labels=rownames(scores(spe.ca, display="species", scaling=1)),col="red", cex=0.8) #### scaling 2 plot(spe.ca, scaling=1, type="none", main='CA - biplot scaling 2', xlab=c("CA1 (%)", round((spe.ca$CA$eig[1]/sum(spe.ca$CA$eig))*100,2)), ylab=c("CA2 (%)", round((spe.ca$CA$eig[2]/sum(spe.ca$CA$eig))*100,2)), ylim=c(-2,3)) points(scores(spe.ca, display="sites", choices=c(1,2), scaling=2), pch=21, col="black", bg="steelblue", cex=1.2) text(scores(spe.ca, display="species", choices=c(1), scaling=2), scores(spe.ca, display="species", choices=c(2), scaling=2), labels=rownames(scores(spe.ca, display="species", scaling=2)),col="red", cex=0.8)

These biplots show that a group of sites located in the left part with similar fish community characterized by numerous species such as GAR, TAN, PER, ROT, PSO and CAR; in the upper right corner, an other site cluster characterized by the species LOC, VAI and TRU is identified; the last site cluster in the lower right corner of the biplot is characterized by the species BLA, CHA and OMB.

Challenge 4 Run a CA of the “mite” species abundance data. What are the significant axes of variation? Which groups of sites can you identify? Which species are related to each group of sites?

Challenge 4 - Solution

3.3. Principal Coordinates Analysis

PCA as well as CA impose the distance preserved among objects: the Euclidean distance (and several others with pre-transformations) for PCA and the Chi2 distance for CA. If one wishes to ordinate objects on the basis of another distance measure, more appropriate to the problem at hand, then PCoA is the method of choice. In PCA we rotated and plotted our data so that as much variation was explained by a first Principal Component and we can see how much “species”, either actual species or environmental variables, contribute to each component by looking at the scores (also called loadings). Another type of unconstrained ordination is called Principal Coordinate Analysis (PCoA). In PCoA, points are added to plane space one at a time using Euclidean distance (or whatever distance (dissimilarity) metric you choose). Basically, one point is added, then a second so that it's distance is correct from the first point and then the third point and so on adding as many axes (dimensions) as necessary along the way. Choosing between PCA and PCoA can be tricky, but generally PCA is used to summarize multivariate data into as few dimensions as possible, whereas PCoA can be used to visualize distances between points. PCoA can be particularly suited for datasets that have more columns than rows. For example, if hundreds of species have been observed over a set of quadrats, then a approach based on a PCoA using Bray-Curtis similarity (see below) may be best suited.

Run a PCoA on the Hellinger transformed species abundances (back to DoubsSpe):

- | cmdscale for PCoA

#Using cmdscale() ?cmdscale cmdscale(dist(spe.hel), k=(nrow(spe)-1), eig=TRUE) #Using pcoa() ?pcoa spe.h.pcoa <- pcoa(dist(spe.hel)) # Extract the results spe.h.pcoa #Construct the biplot biplot.pcoa(spe .h.pcoa, spe.hel, dir.axis2=-1)

The output looks like this (and here here is a video that might help with the explanation of eigenvalues in terms of ordination):

And the PCoA biplot, like this:

You can also run a PCoA using a different distance measure (e.g. Bray-Curtis). Here is a PCoA run on a Bray-Curtis dissimilarity matrix:

- | PCoA with Bray-Curtis

spe.bray.pcoa <- pcoa(spe.db) #where spe.db is the species dissimilarity matrix using Bray-Curtis. spe.bray.pcoa biplot.pcoa(spe.bray.pcoa, spe.hel, dir.axis2=-1) #Note that the distance measure chosen strongly influences the results.

Challenge 5 Run a PCoA on the Hellinger-transformed mite species abundance data. What are the significant axes? Which groups of sites can you identify? Which species are related to each group of sites? How do the PCoA results compare with the PCA results?

Challenge 5 - Solution

3.4 Nonmetric MultiDimensional Scaling

The unconstrained ordination methods presented above allow to organize objects (e.g. sites) characterized by descriptors (e.g. species) in full-dimensional space. In other words, PCA, CA and PCoA computes a large number of ordination axes (proportional to the number of descriptors) representing the variation of descriptors among sites and preserve distance among objects (the Euclidean distances in PCA, the Chi2 distances in CA and the type of distances defined by the user in PCoA). Users can then select the axis of interest (generally the first two ones as the explained the larger part of the variation) to represent objects in an ordination plot. The produced biplot thus represents well the distance among objects (e.g. the between-sites similarity), but fails to represent the whole variation dimensions of the ordination space (as Axis3, Axis4,…, Axisn are not represented on the biplot, but still contribute to explain the variation among objects).

In some case, the priority is not to preserve the exact distances among sites, but rather to represent as accurately as possible the relationships among objects in a small and number of axes (generally two or three) specified by the user. In such cases, nonmetric multidimensional scaling (NMDS) is the solution. If two axes are selected, the biplot produced from NMDS is the better 2D graphical representation of between-objects similarity: dissimilar objects are far apart in the ordination space and similar objects close to one another. Moreover, NMDS allows users to choose the distance measure applied to calculate the ordination.

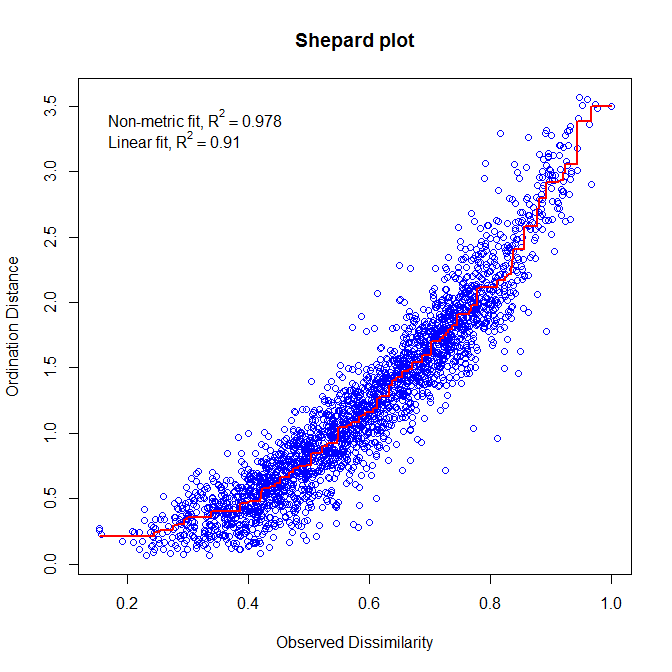

To find the best representation of objects, NMDS applies an iterative procedure that tries to position the objects in the requested number of dimensions in such a way as to minimize a stress function (scaled from 0 to 1) which measure the goodness-of-fit of the distance adjustment in the reduced-space configuration. Consequently, the lower the stress value, the better the representation of objects in the ordination-space is. An additional way to assess the appropriateness of an NDMS is to construct a Shepard diagram which plot distances among objects in the ordination plot against the original distances. The R2 obtained from the regression between these two distances measure the goodness-of-fit of the NMDS ordination.

- | NMDS on spe dataset using Bray-Curtis distance and k=2 ordination axes

# Run the NMDS spe.nmds<-metaMDS(spe, distance='bray', k=2) ### Extract the results spe.nmds ### Assess the goodness of fit and draw a Shepard plot spe.nmds$stress stressplot(spe.nmds, main='Shepard plot') # Construct the biplot windows() plot(spe.nmds, type="none", main=paste('NMDS/Bray - Stress=', round(spe.nmds$stress, 3)), xlab=c("NMDS1"), ylab=c("NMDS2")) points(scores(spe.nmds, display="sites", choices=c(1,2)), pch=21, col="black", bg="steelblue", cex=1.2) text(scores(spe.nmds, display="species", choices=c(1)), scores(spe.nmds, display="species", choices=c(2)), labels=rownames(scores(spe.nmds, display="species")), col="red", cex=0.8)

The Shepard plot identifies a strong correlation between observed dissimilarity and ordination distance (R2 > 0.95), highlighting a high goodness-of-fit of the NMDS.

The biplot of the NMDS shows a group of closed sites characterized by the species BLA, TRU, VAI, LOC, CHA and OMB, while the other species form a cluster of sites in the upper right part of the graph. Four sites in the lower part of the graph are strongly different from the others.

Challenge 6 Run the NMDS of the mite species abundances in 2 dimensions based on a Bray-Curtis distance. Assess the goodness-of-fit of the ordination and interpret the biplot.

Challenge 6 - Solution

3.5. Summary

Ordination is a powerful statistical tecnhique for studying the relationships of objects characterized by several descriptors (e.g. sites described by biotic communities, or environmental variables), but serveral ordination methods exist. These methods mainly differ in the type of distance preserved, the type of variables allowed and the dimensions of the ordination space. To better guide your choice of the ordination method to use, the following table identify the main characteristics of the four ordination methods presented :

In the next workshop, you will see how to identify the relationships between biotic communities and sets of environmental variables describing the same sites, using canonical analyses.